LayarHijau – On December 16, film fans were stirred by the circulation of a video claimed to be a trailer for Avengers: Doomsday. The video depicted the fate of Captain America/Steve Rogers after the events of Endgame, quickly drawing widespread attention.

The video soon spread and went viral across multiple platforms, including China’s social media platform Weibo. Several media outlets then reported that Marvel Studios had actively filed takedown requests to remove the video from various platforms on the grounds of copyright infringement.

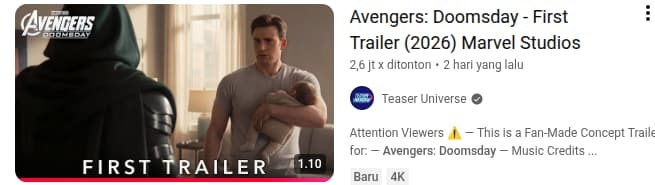

However, when LayarHijau attempted to trace the leaked trailer on YouTube, the results told a different story. Instead of finding the alleged trailer, YouTube was flooded with numerous AI-generated fake trailers. These videos varied in quality, with some appearing convincing enough to mislead casual viewers.

One fake trailer uploaded just two days earlier had already amassed more than 2.6 million views. The video appeared to combine leaked clips previously circulating on Weibo, but with noticeably improved image quality. Viewers began to raise suspicions after spotting spelling errors in the on-screen text, as well as the appearance of Dr. Doom at the end of the trailer, a scene that has never been officially confirmed.

Fake trailers are not a new phenomenon. Even before the rapid advancement of AI technology, such videos frequently circulated online. The difference now is that AI has enabled these fake trailers to achieve a much higher level of realism, making them increasingly difficult to distinguish from official content.

Beyond fake trailers, YouTube is also filled with other types of fabricated videos, including fake post-credit scenes. For instance, after the announcement of Robert Downey Jr.’s return to the MCU as Dr. Doom in Avengers: Doomsday, videos claiming to be post-credit scenes from several recent Marvel films began circulating. These videos were deliberately presented in low resolution, as if recorded inside a movie theater, to create a sense of authenticity.

For dedicated Marvel and DC fans, distinguishing between official and fake content is relatively easy, as they are familiar with reliable sources. For general audiences, however, such videos are often difficult to identify as false.

So how has the industry responded to the surge of AI-generated fake trailers?

Investigative reports published by several international media outlets in 2025 revealed that some major Hollywood studios had adopted a more pragmatic approach to high-traffic fake trailers. Rather than removing all such content outright, some studios reportedly used YouTube’s copyright claim system to redirect advertising revenue from these videos to themselves.

This approach was neither applied openly nor uniformly, and it was never announced as an official policy. However, the practice drew criticism, particularly from the actors’ union SAG-AFTRA, which argued that it could encourage the misuse of AI technology and the exploitation of actors’ likenesses.

The situation began to change toward the end of 2025. YouTube then took firm action against channels that consistently uploaded AI-generated fake movie trailers while presenting them as official content.

On December 19, 2025, YouTube permanently shut down several major channels, including Screen Culture and KH Studio. Both channels were widely known for producing AI-based fake trailers that combined clips from older films, digitally manipulated visuals, and misleading titles and presentations.

Both channels had previously been suspended from the YouTube Partner Program in March 2025. They were later allowed to rejoin on the condition that they clearly labeled their videos as “concept trailers” or “fan-made” rather than official trailers. However, after regaining monetization, the two channels reportedly removed those disclaimers and once again presented their fake videos as official content.

These actions were deemed serious violations of YouTube’s policies, particularly regarding spam and misleading metadata, as the titles, descriptions, and presentation were deliberately crafted to deceive both the algorithm and viewers. As a result, YouTube decided to permanently terminate their accounts.

The shutdown was later confirmed by YouTube to CNET. In its statement, a YouTube spokesperson explained that although both channels had previously made improvements to regain monetization, they subsequently violated platform policies again. According to a Deadline report cited by CNET, Screen Culture and KH Studio, based in India and Georgia respectively, had a combined total of around two million subscribers and collectively accumulated more than one billion views before being shut down.

YouTube’s move coincided with a shift in Disney’s stance. In December 2025, Disney reportedly sent a formal warning letter to Google demanding the removal of AI-generated videos using its characters. This marked a transition from a passive approach to active takedown efforts on Google-owned platforms.

The phenomenon of AI-based fake content has proven even more difficult to address in the context of Asian entertainment, particularly Chinese dramas. Over the past few years, LayarHijau has identified numerous fake videos related to Chinese dramas circulating on YouTube.

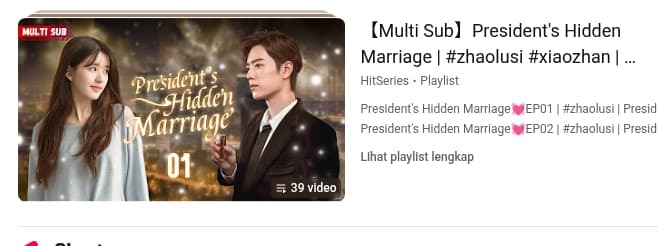

One of the most common practices involves fake poster thumbnails pairing popular actors, such as Zhao Lusi and Xiao Zhan, as if they were starring in a drama together. Upon closer inspection, these videos turn out to contain unrelated and lesser-known dramas. Such videos are often monetized and uploaded in full-length format, allowing them to continue generating advertising revenue.

In addition, various hoax news videos have also circulated. For example, following the tragic death of Yu Menglong, rumors emerged claiming that Yang Mi had attempted to recruit the actor into her agency three times. Shortly afterward, another hoax video claimed that Yang Mi had been involved in an accident. One of these hoax videos was viewed nearly 600,000 times.

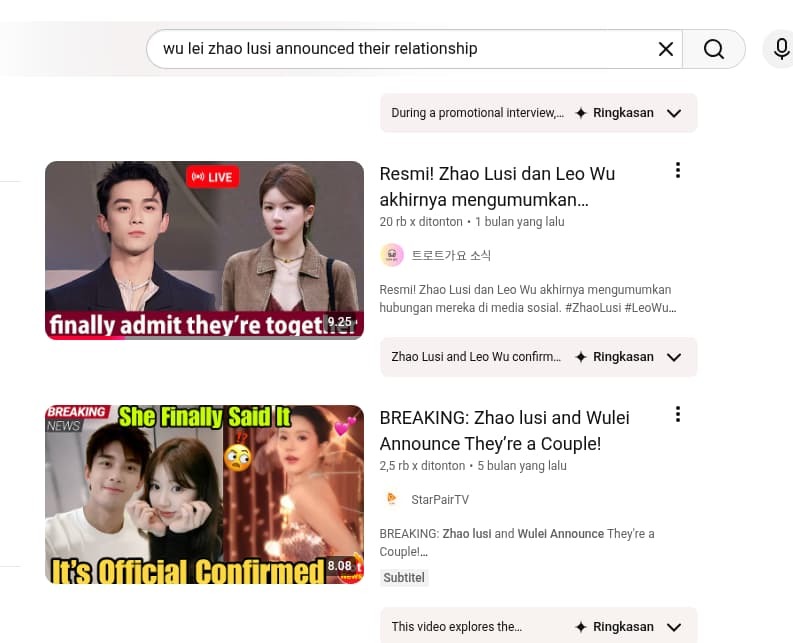

Another example involves a hoax video claiming that Wu Lei and Zhao Lusi had officially announced their romantic relationship. A YouTube channel with a Korean-language username uploaded a video titled “It’s official! Zhao Lusi and Leo Wu have finally gone public with their relationship on social media.”

The video garnered more than 20,000 views, while the channel had approximately 19,700 subscribers. On closer inspection, the video turned out to be a mix of genuine photos and edited images, accompanied by AI-generated voice narration.

Audience responses to such videos varied. Some viewers offered congratulations without realizing the information was false, while others tried to warn fellow viewers that the video was a hoax.

AI-generated fake videos and hoax news often gain wide viewership not simply because they appear convincing, but because of how they are packaged. Sensational titles, provocative thumbnails, and claims of being “official” or “exclusive leaks” trigger curiosity and encourage quick clicks. In many cases, platform algorithms further accelerate their spread due to high early engagement, without adequately distinguishing between valid information and misleading content.

Amid these conditions, the boundaries between entertainment, speculation, and manipulation are becoming increasingly blurred. Videos created from the outset as hoaxes or AI fabrications not only mislead viewers but also risk shaping long-term misconceptions, especially when clarifications fail to reach audiences as large as those of the original videos.

For Chinese drama fans, verifying information related to idols and the Chinese entertainment industry is far from easy, particularly for those unfamiliar with trustworthy official sources.